Directly generating 3D meshes, the default representation for 3D shapes in the graphics industry, with auto-regressive (AR) models has become popular, thanks to the sharpness and compactness of the generated results and the ability of meshes to represent various types of surfaces. However, AR mesh generative models typically construct meshes face by face in lexicographic order, which does not capture the underlying geometry in a manner consistent with human perception.

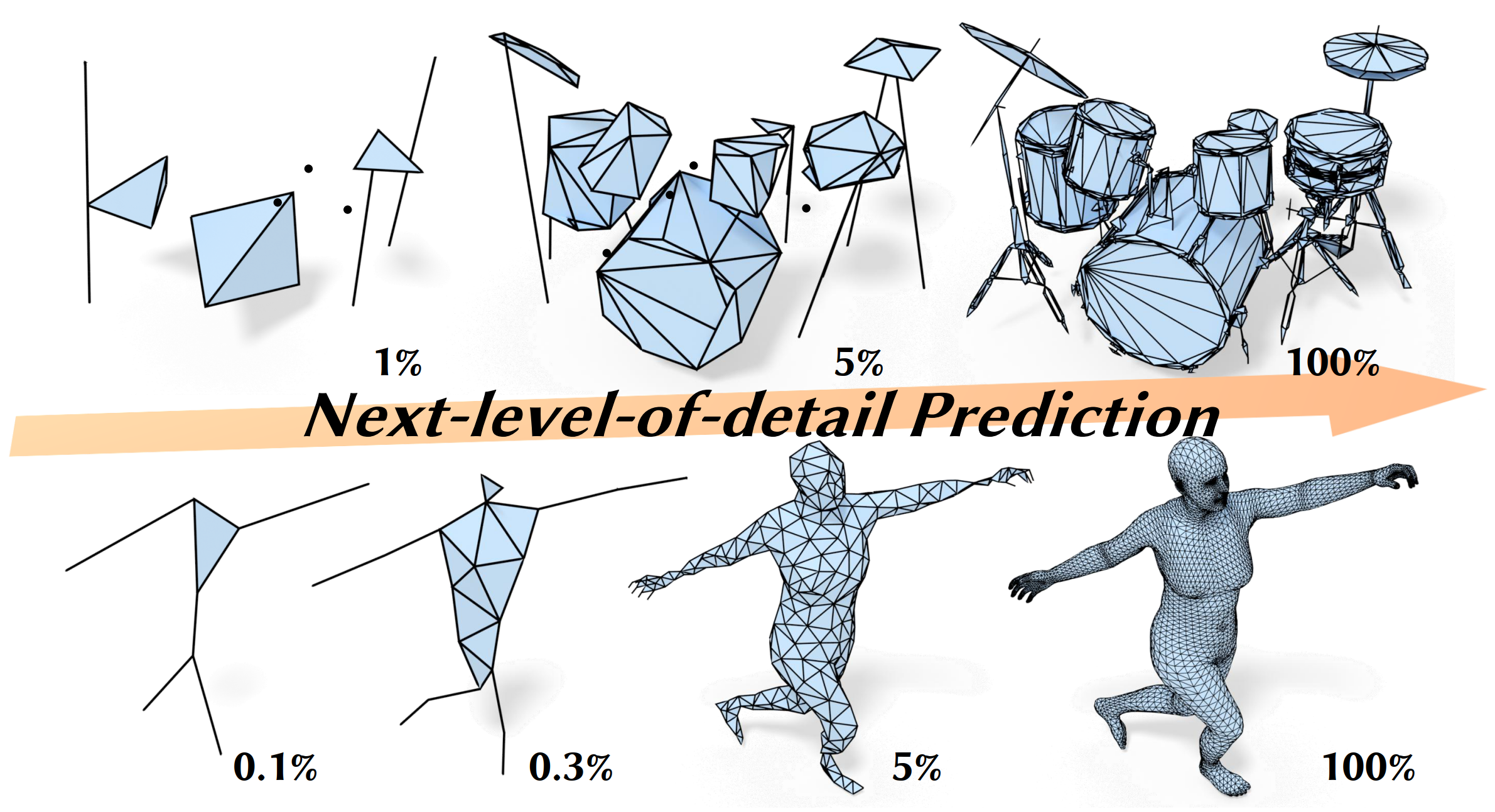

Inspired by 2D models that progressively refine images, such as the prevailing next-scale prediction AR models, we propose generating meshes auto-regressively in a progressive coarse-to-fine manner. Specifically, we view mesh simplification algorithms, which gradually merge mesh faces to build simpler meshes, as a natural fine-to-coarse process. We therefore generalize meshes to simplicial complexes and develop a transformer-based AR model that approximates the reverse of simplification in order of level of detail: it constructs a mesh starting from a single point and gradually adds geometric details through local remeshing, where the topology is not predefined and can change. Our experiments show that this novel progressive mesh generation approach not only provides intuitive control over generation quality and time consumption by early stopping the auto-regressive process, but also enables applications such as mesh refinement and editing.
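
The coarse-to-fine ordering described above can be illustrated with a small toy sketch. It is not the proposed model: there is no transformer and no geometry, and the merge rule, along with the hypothetical helper names `closure`, `merge_vertices`, `simplify_to_point`, and `coarse_to_fine`, is an assumption made purely for illustration. The sketch represents a mesh as a simplicial complex, simplifies it to a single point by repeatedly merging vertices, and then replays the recorded levels in reverse. The `max_steps` argument mimics early stopping.

```python
from itertools import combinations


def closure(simplices):
    """Return the simplicial complex spanned by the given simplices (all their faces)."""
    out = set()
    for s in simplices:
        s = frozenset(s)
        for k in range(1, len(s) + 1):
            out.update(frozenset(c) for c in combinations(sorted(s), k))
    return out


def merge_vertices(complex_, keep, drop):
    """Merge vertex `drop` into `keep`; degenerate simplices drop in dimension."""
    return {frozenset(keep if v == drop else v for v in s) for s in complex_}


def simplify_to_point(complex_):
    """Fine-to-coarse pass: merge vertices until one point remains; return every level."""
    levels = [complex_]
    while True:
        verts = sorted({v for s in levels[-1] for v in s})
        if len(verts) == 1:
            break
        # Toy rule (assumption): merge the largest vertex id into the smallest.
        # A real simplifier would choose the merge by a geometric error metric.
        levels.append(merge_vertices(levels[-1], verts[0], verts[-1]))
    return levels


def coarse_to_fine(complex_, max_steps=None):
    """Reverse the simplification levels; optionally stop early after `max_steps` refinements."""
    levels = simplify_to_point(complex_)[::-1]
    return levels if max_steps is None else levels[: max_steps + 1]


if __name__ == "__main__":
    # Surface of a tetrahedron: 4 vertices, 6 edges, 4 triangles.
    tet = closure([(0, 1, 2), (0, 1, 3), (0, 2, 3), (1, 2, 3)])
    for level in coarse_to_fine(tet):
        n_verts = sum(1 for s in level if len(s) == 1)
        print(n_verts, "vertices,", len(level), "simplices")
```

In this toy setting the refinement steps are simply replayed from a recorded simplification. In the approach described in the abstract, a learned AR model would instead predict each refinement step, and stopping after fewer steps would yield a coarser mesh.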